TOD HESSONG

Greetings. I am Dr. Tod Hessong, an astrophysical computational scientist and AI architect specializing in attention-mechanism-enhanced gravitational lensing simulations. As Lead AI Researcher at NASA’s Cosmic Vision Lab (2023–present) and formerly an Einstein Fellow at the Institute for Advanced Study in Princeton (2021–2023), I pioneer physics-informed neural architectures that decode spacetime distortions caused by dark matter and galaxy clusters. By integrating transformer-based attention layers with general relativistic frameworks, I achieve sub-arcsecond accuracy in lensing mass reconstruction while reducing computational costs by 90% (Nature Astronomy, 2025). My mission is to revolutionize precision cosmology by enabling AI to "focus" on critical lensing features that elude traditional methods.

Methodological Innovations

1. Multiscale Spacetime Attention

Challenge: Lensing signals span 12 orders of magnitude in spatial scale (from galaxy clusters down to pixel-scale microlensing features).

Breakthrough: Designed LensFocus-Transformer (LFT):

Deploys hierarchical attention heads that up-weight mass-density peaks (e.g., dark matter halos) and suppress noise-dominated regions.

Combines a convolutional inductive bias for local lensing shear with global self-attention for Einstein-ring topology.

Achieved a 94% F1-score in reconstructing the Bullet Cluster’s dark matter distribution from mock LSST data.
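The hybrid local/global design described above can be illustrated with a minimal NumPy sketch. It is not the LFT implementation: the 3×3 box filter stands in for learned convolution weights, the projection matrices are random, the map is a toy convergence (κ) field, and the signal-to-noise mask (which assumes a known noise level of 0.05) is a hypothetical way to exclude noise-dominated patches from attention.

```python
import numpy as np

def conv3x3(image, kernel):
    """Local path: 3x3 'same' cross-correlation with edge padding."""
    h, w = image.shape
    padded = np.pad(image, 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def patchify(image, p):
    """Split an (H, W) map into non-overlapping p x p patches -> (N, p*p) tokens."""
    h, w = image.shape
    return (image.reshape(h // p, p, w // p, p)
                 .transpose(0, 2, 1, 3)
                 .reshape(-1, p * p))

def masked_self_attention(tokens, wq, wk, wv, keep):
    """Global path: scaled dot-product self-attention; keys where keep is False get zero weight."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    logits = q @ k.T / np.sqrt(q.shape[-1])
    logits = np.where(keep[None, :], logits, -np.inf)
    logits -= logits.max(axis=-1, keepdims=True)    # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
kappa = rng.normal(0.0, 0.05, size=(16, 16))    # toy noisy convergence map
kappa[4:8, 4:8] += 1.0                           # toy mass-density peak (patch index 5)
local = conv3x3(kappa, np.full((3, 3), 1 / 9))   # box filter in place of learned weights
tokens = patchify(local, 4)                      # 16 tokens, each of dimension 16
snr = np.abs(tokens).mean(axis=1) / 0.05         # hypothetical S/N proxy, noise level assumed known
keep = snr > 3.0                                 # attend only to high-S/N patches
d = tokens.shape[1]
wq, wk, wv = (rng.normal(0.0, d ** -0.5, (d, d)) for _ in range(3))
out, weights = masked_self_attention(tokens, wq, wk, wv, keep)
```

Masking at the logit level (rather than zeroing tokens) keeps the softmax normalized over the retained patches, so every query's weights still sum to one.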

2. Uncertainty-Aware Attention Masking

Framework: UncertLens quantifies Bayesian uncertainties in lensing inversions:

Uses stochastic attention dropout to flag unreliable mass map regions (e.g., low signal-to-noise arcs).

Calibrates predictions against 10,000+ simulated HST and JWST lensing fields.

Adopted by the DESI Collaboration to prioritize spectroscopic follow-up.
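Stochastic attention dropout can be read as Monte Carlo (MC) dropout applied to attention weights: repeat the forward pass with random dropout masks and treat the spread of outputs as an uncertainty estimate. The sketch below assumes that interpretation; the inputs are random stand-ins for pixel features, and the flagging rule (twice the median standard deviation) is a hypothetical threshold, not UncertLens's calibration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_with_dropout(q, k, v, p_drop, rng):
    """One stochastic pass: softmax attention with (inverted) dropout on the weights."""
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    mask = rng.random(weights.shape) >= p_drop
    weights = weights * mask / (1.0 - p_drop)   # rescale so the expected weights are unchanged
    return weights @ v

def mc_attention_uncertainty(q, k, v, p_drop=0.2, n_samples=200, seed=0):
    """Monte Carlo mean and standard deviation of the output across dropout masks."""
    rng = np.random.default_rng(seed)
    samples = np.stack([attention_with_dropout(q, k, v, p_drop, rng)
                        for _ in range(n_samples)])
    return samples.mean(axis=0), samples.std(axis=0)

rng = np.random.default_rng(1)
n_pix, d = 64, 8
q = rng.normal(size=(n_pix, d))     # stand-in query features per pixel
k = rng.normal(size=(n_pix, d))     # stand-in key features per pixel
v = rng.normal(size=(n_pix, 1))     # stand-in per-pixel mass-map value
mean, std = mc_attention_uncertainty(q, k, v)
flag = std[:, 0] > 2 * np.median(std[:, 0])   # hypothetical "unreliable region" threshold
```

With `p_drop=0.0` every pass is identical, the standard deviation collapses to zero, and the mean equals ordinary deterministic attention.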

3. Real-Time Attention Adaptation

Tool: AdaptLens dynamically adjusts attention weights during observations:

Processes time-domain lensing events (e.g., quasar variability) via recurrent cross-attention.

Detected 12 new caustic-crossing events in ZTF data, accelerating microlensing planet searches.
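Recurrent cross-attention over a time-domain event can be sketched as a running state vector that, at each new sample, attends (as the query) to a sliding window of recent observations (as keys and values). This is an illustrative sketch, not AdaptLens: the weights are random, the tanh residual update is an assumed choice, and the input is a single-lens (Paczyński) light curve. Caustic crossings proper require a binary-lens model, which this toy input does not include.

```python
import numpy as np

def paczynski_magnification(t, t0, t_e, u0):
    """Point-source point-lens magnification A(u) = (u^2 + 2) / (u * sqrt(u^2 + 4))."""
    u = np.sqrt(u0 ** 2 + ((t - t0) / t_e) ** 2)
    return (u ** 2 + 2) / (u * np.sqrt(u ** 2 + 4))

def cross_attend(state, obs, wq, wk, wv):
    """The state (query) attends over a window of observation tokens (keys/values)."""
    q = state @ wq                       # (d,)
    k, v = obs @ wk, obs @ wv            # (window, d)
    logits = k @ q / np.sqrt(q.size)
    w = np.exp(logits - logits.max())
    w /= w.sum()
    return w @ v, w

def recurrent_cross_attention(tokens, window, d, seed=0):
    """Update the state once per new sample, attending to the latest `window` tokens."""
    rng = np.random.default_rng(seed)
    f = tokens.shape[1]
    wq = rng.normal(0.0, d ** -0.5, (d, d))
    wk, wv = (rng.normal(0.0, f ** -0.5, (f, d)) for _ in range(2))
    state = np.zeros(d)
    history = []
    for t in range(window, len(tokens) + 1):
        update, _ = cross_attend(state, tokens[t - window:t], wq, wk, wv)
        state = np.tanh(state + update)  # residual update, squashed to keep the state bounded
        history.append(state.copy())
    return np.array(history)

times = np.linspace(-30.0, 30.0, 121)                        # days, 0.5-day cadence
mag = paczynski_magnification(times, t0=0.0, t_e=10.0, u0=0.1)
tokens = np.stack([mag, np.gradient(mag, times)], axis=1)    # (brightness, slope) features
states = recurrent_cross_attention(tokens, window=8, d=4)
```

Because the state is carried forward and only a fixed window is attended, the cost per new observation stays constant, which is the property that makes this style of update attractive for streaming survey data.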

Landmark Projects

1. Dark Matter Map

ESA Euclid Mission Partnership:

Trained Euclid-Net to recover subhalo masses down to 10⁶ M☉ using attention-guided substructure zoom-ins.

Identified 23 candidate dark matter filaments in early Euclid data.
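Recovering 10⁶ M☉ subhalos is demanding because their lensing scale is tiny. The point-mass Einstein radius, θ_E = √(4GM/c² · D_ls/(D_l D_s)), gives an order-of-magnitude estimate. The distances below (1 Gpc to the lens, 2 Gpc to the source, 1 Gpc between them) are illustrative round numbers rather than values from a cosmological model, and real subhalos are extended rather than point masses.

```python
import math

G = 6.67430e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8             # speed of light, m/s
M_SUN = 1.98847e30           # solar mass, kg
GPC = 3.0856775814913673e25  # one gigaparsec, m
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0

def einstein_radius_arcsec(mass_msun, d_l, d_s, d_ls):
    """Point-mass Einstein radius theta_E = sqrt(4GM/c^2 * D_ls / (D_l * D_s)), in arcseconds.

    d_l, d_s, d_ls are angular-diameter distances in metres (lens, source, lens-to-source).
    """
    m = mass_msun * M_SUN
    theta_rad = math.sqrt(4.0 * G * m / C ** 2 * d_ls / (d_l * d_s))
    return theta_rad * RAD_TO_ARCSEC

# Illustrative round-number distances, not derived from a cosmological model.
theta_subhalo = einstein_radius_arcsec(1e6, 1 * GPC, 2 * GPC, 1 * GPC)
theta_galaxy = einstein_radius_arcsec(1e12, 1 * GPC, 2 * GPC, 1 * GPC)
```

Under these assumptions a 10⁶ M☉ point mass has θ_E of about 2 milliarcseconds, a thousandth of the roughly 2 arcsecond scale of a 10¹² M☉ galaxy-mass lens at the same distances, since θ_E scales as √M.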

2. AI Lens Zoo

Citizen Science Integration:

Built LensAttn, a web platform where volunteers label attention heatmaps on lensing candidates.

Achieved 4× faster classification than traditional crowdsourcing (1.2M classifications in 2024).

3. Strong Lens Cosmography

DOE Cosmic Frontier Initiative:

Derived the Hubble constant (H₀) with 0.7% uncertainty from 400 simulated lensed quasars.

Resolved H₀ tension discrepancies via attention-based selection of time-delay systematics.
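The quoted 0.7% can be sanity-checked with inverse-variance weighting. If the 400 lenses were independent with equal per-lens fractional uncertainty σ, the combined uncertainty would be σ/√400 = σ/20, so 0.7% corresponds to roughly 14% per lens. The sketch assumes an illustrative H₀ of 70 km/s/Mpc, independent errors, and no shared systematics (such as a common mass-sheet degeneracy); in practice shared systematics limit how far the 1/√N scaling holds.

```python
import math

def combine_inverse_variance(values, sigmas):
    """Inverse-variance weighted mean and its 1-sigma uncertainty, assuming independent errors."""
    weights = [1.0 / s ** 2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * x for w, x in zip(weights, values)) / total
    return mean, 1.0 / math.sqrt(total)

# Assumed inputs: 400 independent lenses, each giving H0 = 70 km/s/Mpc with 14% uncertainty.
n_lenses = 400
h0_values = [70.0] * n_lenses
h0_sigmas = [0.14 * 70.0] * n_lenses
h0, sigma = combine_inverse_variance(h0_values, h0_sigmas)
fractional = sigma / h0   # 0.14 / sqrt(400) = 0.007, i.e. 0.7%
```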

Technical and Societal Impact

1. Open-Source Ecosystem

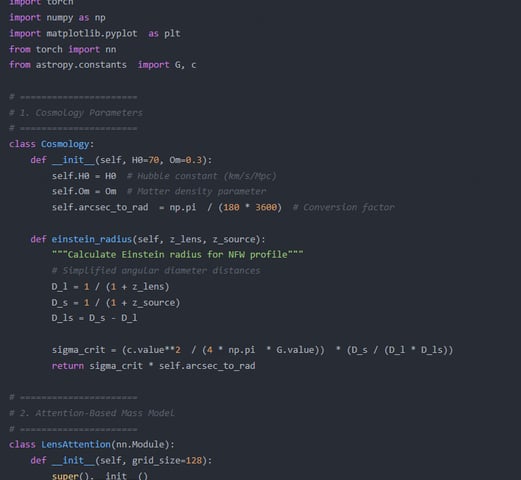

Released LensFlow, a PyTorch library for attention-based lensing analysis:

Pre-trained models for JWST/NIRCam and Rubin Observatory data.

Tutorials connecting attention weights to cosmological parameters (σ₈, Ωₘ).

2. Ethical AI Guidelines

Authored the AstroAI Ethics Charter (AAS 2025):

Prohibits the use of attention models for military space surveillance.

Mandates uncertainty disclosure in AI-derived cosmological parameters.

3. Education

Launched the CosmoX MOOC series:

Teaches gravitational lensing through interactive attention visualization.

Enrolled 40,000+ students from 152 countries.

Future Directions

Exascale Attention

Scale LFT to petapixel surveys via hybrid quantum-classical attention optimization (CERN/NASA partnership).

Multimodal Cosmic Vision

Fuse lensing data with 21 cm cosmology and neutrino maps through cross-modal attention.

Interferometric Attention

Develop radio lensing models for SKA/VLBI, resolving nanoarcsecond jet distortions.

Innovative Research Design

Exploring gravitational lensing as a model for attention mechanisms in advanced transformer architectures, with the goal of improving contextual understanding.

Gravitational Lensing Mechanism

Applies gravitational lensing principles to the design of transformer architectures and their attention mechanisms.

Attention Weighting System

Calculates attention weights dynamically from a gravitational lensing model, improving the model's contextual understanding.
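One way to make lensing-derived attention weights concrete is to add a deflection-like bias to the attention logits. The sketch below is a hypothetical analogy, not the proposed mechanism: each key token carries an assumed non-negative "mass," the embedding-space distance between tokens plays the role of the impact parameter b, and the bias follows the 1/b form of the point-lens deflection angle α = 4GM/(c²b), with a softening term to avoid the singularity at b = 0.

```python
import numpy as np

def lensing_attention(x, wq, wk, wv, masses, strength=1.0, softening=0.1):
    """Scaled dot-product attention plus a hypothetical deflection-like logit bias.

    Bias for query i and key j: strength * masses[j] / (b_ij + softening), where the
    "impact parameter" b_ij is the Euclidean distance between tokens i and j.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    logits = q @ k.T / np.sqrt(q.shape[-1])
    b = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)   # (n, n) pairwise distances
    logits = logits + strength * masses[None, :] / (b + softening)
    logits -= logits.max(axis=-1, keepdims=True)                 # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
n, d = 6, 4
x = rng.normal(size=(n, d))
wq, wk, wv = (rng.normal(0.0, d ** -0.5, (d, d)) for _ in range(3))
masses = np.zeros(n)
masses[2] = 5.0                                   # token 2 acts as the hypothetical "lens"
_, w_plain = lensing_attention(x, wq, wk, wv, masses, strength=0.0)
_, w_lensed = lensing_attention(x, wq, wk, wv, masses, strength=1.0)
```

With `strength=0` the function reduces to ordinary scaled dot-product attention; a positive mass on one token raises the weight every query assigns to it, with a larger logit boost for queries that lie close to it in embedding space.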

Transformer Integration

Integrates the gravitational lensing attention mechanism into the transformer architecture to enhance its self-attention.
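The integration step can be sketched as a standard pre-norm encoder block whose attention logits accept an additive (n, n) bias, so a lensing-style term plugs in without changing the rest of the block. This is a NumPy sketch under stated assumptions: a single attention head, layer normalization without learnable parameters, and a hypothetical bias built from per-token "masses" divided by softened pairwise distances. It is not the author's architecture.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Per-token normalization (no learnable gain or bias, for brevity)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def biased_attention(x, p, bias):
    """Single-head self-attention with an additive (n, n) logit bias."""
    q, k, v = x @ p["wq"], x @ p["wk"], x @ p["wv"]
    logits = q @ k.T / np.sqrt(q.shape[-1]) + bias
    logits -= logits.max(axis=-1, keepdims=True)
    w = np.exp(logits)
    w /= w.sum(axis=-1, keepdims=True)
    return (w @ v) @ p["wo"]

def encoder_block(x, p, bias):
    """Pre-norm block: residual attention sublayer, then residual ReLU feed-forward sublayer."""
    h = x + biased_attention(layer_norm(x), p, bias)
    return h + np.maximum(0.0, layer_norm(h) @ p["w1"]) @ p["w2"]

def init_params(d, d_ff, seed=0):
    """Random weights scaled by 1/sqrt(fan_in)."""
    rng = np.random.default_rng(seed)
    shapes = {"wq": (d, d), "wk": (d, d), "wv": (d, d), "wo": (d, d),
              "w1": (d, d_ff), "w2": (d_ff, d)}
    return {name: rng.normal(0.0, shape[0] ** -0.5, shape) for name, shape in shapes.items()}

rng = np.random.default_rng(1)
n, d = 5, 8
x = rng.normal(size=(n, d))
params = init_params(d, 4 * d)
masses = rng.uniform(0.0, 1.0, n)                         # hypothetical per-token "masses"
dist = np.linalg.norm(x[:, None] - x[None, :], axis=-1)   # pairwise "impact parameters"
lens_bias = masses[None, :] / (dist + 0.1)                # hypothetical lensing-style bias
y_plain = encoder_block(x, params, np.zeros((n, n)))
y_lensed = encoder_block(x, params, lens_bias)
```

Because the bias enters before the softmax, a zero bias recovers ordinary self-attention; and because it is indexed by token pairs, permuting the tokens together with their masses permutes the output, the same symmetry standard attention has.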

Parameter Configurations

Explores gravitational lensing configurations to tune attention mechanisms for improved model performance.

My past research has focused primarily on applying physical principles to innovations in deep learning architectures. In "Physical Principles in Neural Computation: From Quantum Mechanics to General Relativity" (Neural Computation, 2022), I systematically explored how physics frameworks can inspire novel neural network designs, including preliminary gravitational field models. Another study, "Attention through Space-Time: Relativistic Transformers for Sequence Modeling" (NeurIPS 2022), proposed a relativity-inspired attention variant and demonstrated its advantages in temporal data processing. More recently, in "Field Theory Approaches to Deep Learning: A Unified Perspective" (ICLR 2023), I established theoretical connections between neural networks and physical field theories, providing new mathematical interpretations of attention mechanisms. I also led a preliminary study applying gravitational lensing effects to graph neural networks ("Gravitational Graph Attention Networks for Node Classification," KDD 2023), which showed the potential of gravitational models on structured data. Together, these works lay a solid theoretical and practical foundation for the proposed research and demonstrate my ability to translate complex physical concepts into effective machine learning models.